Research

Camera-Based Visibility Estimation

Atmospheric visibility is an important meteorological quantity, with diverse applications from aeronautical meteorology to climatology. Visibility is traditionally estimated from visual observations and measured using scatterometers and transmissometers. These instruments provide automated measurements, but only at a small number of sites, and only of a small atmospheric volume.

We are therefore engaged in an effort to increase the spatial and temporal availability of visibility estimates. Using outdoor web cameras for this task has several benefits. In particular, cameras record the incoming light from many directions at once, enabling more descriptive characterizations when the atmosphere is not homogeneous, such as minimum-maximum ranges of visibility, histograms for different sectors or map-based visualizations.

CIMO TECO 2024 paper and presentation | GitHub repository | Zenodo upload

MET Alliance ET AUTO OBS 2020 presentation

Photographic Visualization of Weather Forecasts

Outdoor webcam images visualize multiple aspects of the past and present weather, and are also easy to interpret. They are therefore consulted by meteorologists and the general public alike. Weather forecasts, in contrast, are communicated as text, pictograms or charts, each focusing on separate aspects of the future weather. We propose a method that uses photographic images to also visualize future weather conditions.

This is challenging, because photographic visualizations of weather forecasts should look real, be free of obvious artifacts, and match the predicted weather conditions. The transition from observation to forecast should be seamless, and there should be visual continuity between images for consecutive lead times. We use conditional Generative Adversarial Networks to synthesize such visualizations. The generator network, conditioned on the analysis and the forecast state of the numerical weather prediction (NWP) model, transforms the present camera image into an image of the future. The discriminator network judges whether a given image is the real image of the future, or whether it has been synthesized. Training the two networks against each other results in a visualization method that scores well on all four evaluation criteria.
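
For orientation, the adversarial training described above can be written as the textbook conditional GAN objective. The symbols are assumptions introduced here, not notation from the paper: x is the present camera image, c the conditioning information (analysis and forecast state of the NWP model), y the real future image, G the generator and D the discriminator. Whether the discriminator also sees x and c is likewise an assumption, and the loss actually used in the paper may contain further terms.

```latex
\min_{G} \max_{D} \;
\mathbb{E}_{x, c, y}\bigl[\log D(y \mid x, c)\bigr]
+ \mathbb{E}_{x, c}\bigl[\log\bigl(1 - D(G(x, c) \mid x, c)\bigr)\bigr]
```

The discriminator is rewarded for telling real future images from synthesized ones, while the generator is rewarded for producing images that the discriminator accepts as real.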

AIES 2023 paper | GitHub repository | Zenodo upload

Colloquium in Climatology, Climate Impact and Remote Sensing 2022 presentation

RSI TV segment "Il Quotidiano" (2024-02-14)

Probabilistic Plausibility of Surface Data

At MeteoSwiss, the quality control (QC) of surface data occurs at several steps along the data processing chain: in the instrument itself, in the logger, during import into the data warehouse, and hours, days and even years later. The early tests act in real time, but are very limited in scope: they can flag a measurement as implausible only if it is physically impossible. Later tests are more sophisticated and often involve measurements from other instruments and sites. They find more implausible measurements, but also generate false positives.

It is a challenge to combine the quality information (QI) from all independent QC systems. We propose to use the probabilistic plausibility of the measurement, represented on a continuous scale between 0 (impossible) and 1 (confirmed by an expert). The probabilistic plausibility is calculated from the a-priori plausibility of the measurement parameter, all available test outcomes and any expert inspection, following the well-known statistical procedure called Naïve Bayes. Using this procedure, each test outcome influences the probabilistic plausibility according to the strength of its evidence. As soon as a new test outcome is available, the plausibility of the measurement can be updated efficiently.

Summarizing all available QI in a single number helps our customers to set an appropriate threshold on the minimum data quality that they need for their use case.
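
The incremental Naïve Bayes update described above can be sketched in a few lines of Python. This is a minimal illustration, not the MeteoSwiss implementation: the function name `update_plausibility`, the prior of 0.99 and all test likelihoods are hypothetical values chosen for the example.

```python
def update_plausibility(plausibility, p_outcome_if_plausible, p_outcome_if_implausible):
    """Apply Bayes' rule for one new test outcome.

    plausibility: current probability that the measurement is plausible.
    p_outcome_if_plausible: probability of the observed test outcome,
        given that the measurement is plausible.
    p_outcome_if_implausible: probability of the same outcome,
        given that the measurement is implausible.
    """
    numerator = plausibility * p_outcome_if_plausible
    denominator = numerator + (1.0 - plausibility) * p_outcome_if_implausible
    if denominator == 0.0:
        raise ValueError("outcome has zero probability under both hypotheses")
    return numerator / denominator


# Hypothetical a-priori plausibility of the measurement parameter.
plausibility = 0.99

# Hypothetical outcomes: (P(outcome | plausible), P(outcome | implausible)).
outcomes = [
    (0.999, 0.5),  # a simple range check passed: weak evidence
    (0.02, 0.7),   # a spatial consistency test failed: strong evidence
]

# Naive Bayes treats the tests as conditionally independent, so each new
# outcome can be folded in as soon as it arrives.
for p_if_plausible, p_if_implausible in outcomes:
    plausibility = update_plausibility(plausibility, p_if_plausible, p_if_implausible)

print(round(plausibility, 3))
```

Because the tests are treated as conditionally independent, the order in which outcomes arrive does not change the result. An expert confirmation can be modeled as an outcome that is impossible for an implausible measurement, which moves the plausibility to 1.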

EUMETNET STAC WG AQC 2019 presentation

EUMETNET DMW 2017 presentation

Learning Dictionaries with Bounded Coherence

Over-complete dictionaries can achieve a lower approximation error than orthogonal dictionaries in sparse coding applications. On the one hand, increasing the number of dictionary atoms leads to sparser solutions. On the other hand, a dictionary with low self-coherence has several advantages, such as a more rapid decay of the residual error. We propose a dictionary learning algorithm that can achieve any trade-off between both objectives.
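
To make the trade-off concrete, the sketch below measures the coherence of a small dictionary. It assumes that self-coherence means the usual mutual coherence, i.e. the largest absolute cosine similarity between two distinct atoms; the function name `mutual_coherence` and the example dictionaries are hypothetical.

```python
import math


def mutual_coherence(atoms):
    """Return the largest absolute cosine similarity between distinct atoms."""
    def normalize(vector):
        norm = math.sqrt(sum(x * x for x in vector))
        return [x / norm for x in vector]

    unit_atoms = [normalize(atom) for atom in atoms]
    coherence = 0.0
    for i in range(len(unit_atoms)):
        for j in range(i + 1, len(unit_atoms)):
            dot = sum(x * y for x, y in zip(unit_atoms[i], unit_atoms[j]))
            coherence = max(coherence, abs(dot))
    return coherence


# An orthogonal dictionary in two dimensions has zero coherence.
orthogonal = [[1.0, 0.0], [0.0, 1.0]]

# Adding a third atom makes the dictionary over-complete and raises the
# coherence, because the new atom is correlated with the existing ones.
overcomplete = orthogonal + [[1.0, 1.0]]

print(mutual_coherence(orthogonal))
print(mutual_coherence(overcomplete))
```

Adding atoms to a dictionary of fixed dimension cannot lower this measure, which is why the number of atoms and the coherence have to be traded off against each other.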

IEEE SPL 2012 paper | Further material

Speech Enhancement with Sparse Coding in Learned Dictionaries

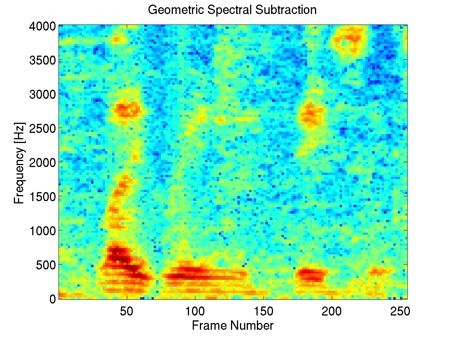

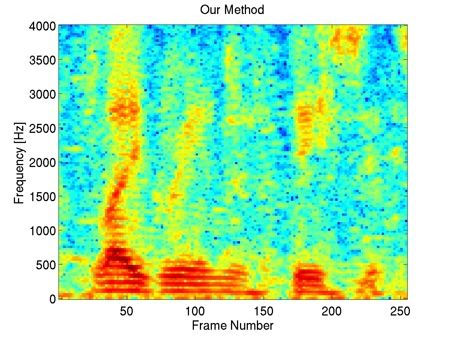

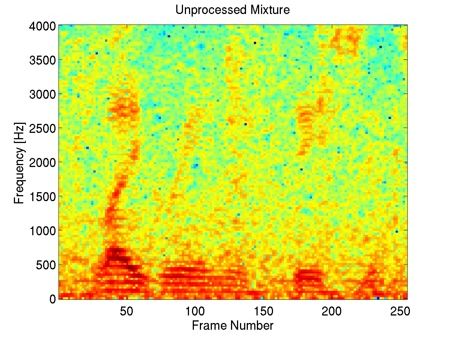

Speech enhancement is difficult if the target and interferer sources are partially coherent, such as for speech in the presence of babble noise. We propose a sparse coding algorithm (called LARC) for training dictionaries and enhancing speech in the presence of challenging non-stationary interferers.

IEEE Trans ASLP 2012 paper | Further material | Matlab toolbox larc-0.1.zip

ICASSP 2010 paper | Further material

EM for Sparse and Non-Negative PCA

Classical principal component analysis produces full projection vectors with mixed signs. For some applications, sparse and/or non-negative solutions are more appropriate. We propose an algorithm that is efficient for large and high-dimensional datasets and can handle the case where the number of features exceeds the number of observations.
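
The following sketch illustrates what a sparse, non-negative projection vector looks like. It is a simplified stand-in, a power iteration with clipping and truncation, and not the EM algorithm from the ICML paper; the function name `sparse_nonneg_component`, the `cardinality` parameter and the toy data are hypothetical.

```python
import math


def sparse_nonneg_component(rows, cardinality, iterations=100):
    """Approximate a sparse, non-negative leading direction of centered data.

    rows: list of observations, each a list of centered feature values.
    cardinality: maximum number of non-zero loadings to keep.
    """
    dim = len(rows[0])
    w = [1.0 / math.sqrt(dim)] * dim  # non-negative starting point
    for _ in range(iterations):
        # Project every observation onto the current direction.
        scores = [sum(x * wj for x, wj in zip(row, w)) for row in rows]
        # Unconstrained update w <- X^T (X w), as in plain power iteration.
        w = [sum(s * row[j] for s, row in zip(scores, rows)) for j in range(dim)]
        # Non-negativity: clip negative loadings to zero.
        w = [max(wj, 0.0) for wj in w]
        # Sparsity: keep only the `cardinality` largest loadings.
        keep = sorted(range(dim), key=lambda j: w[j], reverse=True)[:cardinality]
        w = [w[j] if j in keep else 0.0 for j in range(dim)]
        norm = math.sqrt(sum(wj * wj for wj in w))
        if norm == 0.0:
            raise ValueError("all loadings were clipped to zero")
        w = [wj / norm for wj in w]
    return w


# Toy data: the first two features vary together, the third is small noise.
data = [
    [2.0, 2.0, 0.1],
    [-2.0, -2.0, -0.1],
    [1.0, 1.0, -0.1],
    [-1.0, -1.0, 0.1],
]

loadings = sparse_nonneg_component(data, cardinality=2)
print(loadings)
```

With a cardinality of two, the loading of the noise feature is set exactly to zero, and the remaining loadings are non-negative, which makes the component easier to interpret than a full vector with mixed signs.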

ICML 2008 paper | Matlab toolbox emPCA-0.4.zip | R package

Since the publication of the ICML paper, the method has been substantially expanded, and a mature implementation is available as an R package. I also wrote up an example from the domain of portfolio optimization, and compared different methods on a gene expression data set.

Non-Negative CCA for Audio-Visual Source Separation

We apply canonical correlation analysis to the task of audio-visual source separation. By enforcing the proper constraints on the audio and video projection vectors, we are able to identify sources in video and acoustically separate them with the help of a microphone array.

MLSP 2007 paper | NIPS 2006 workshop paper | R package

The algorithm presented in the MLSP paper has also been substantially expanded into a general-purpose R package for sparse and non-negative canonical correlation analysis. A demonstration is given in this blog post.